Archive tree Drupal module

It was nice to get my shiny new theme mostly figured out last week. The blog is more mine than it has ever been.

The thing that I wanted next was a way to browse posts from the archive, by year and month. I recall trying this a few years back, finding it difficult, and giving up. I tried again for a few hours over a few days recently, and it is just baffling. Some people claim it can be done with a View, and it seems possible, but I have tried almost everything and it is not even close.

Examples from the Internet (people want this!):

- https://drupal.stackexchange.com/questions/215419/blog-monthly-archive-block-list-of-posts-grouped-by-year-then-month

- https://drupal.stackexchange.com/questions/238838/views-archive-page-showing-year-and-months-of-article-content-type

- https://drupal.stackexchange.com/questions/205581/how-to-show-year-months-in-archive-block

- https://drupal.stackexchange.com/questions/275848/view-of-blog-posts-grouped-by-year

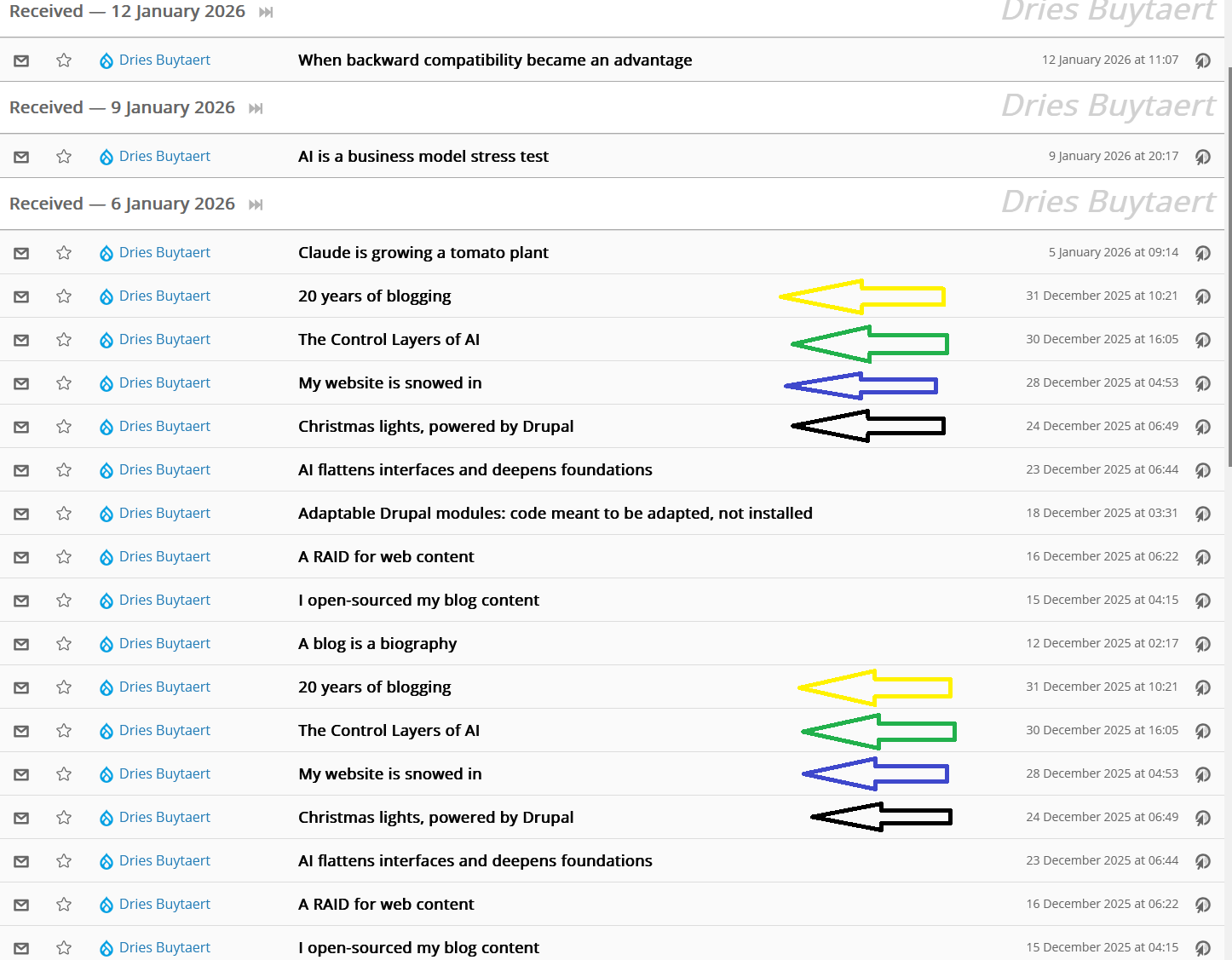

The goal is this, with years being able to expand/contract:

I believe this is an easy thing to do in WordPress, as I have seen it occasionally around the Web. Possibly stock. It is impossible to find if you are looking for an example though! There is an obsolete Drupal module, WP Blog, which claims to have duplicated this view. In Drupal, the stock Archive view is a thing, but is kind of limited. It looks like this:

- October 2023 (3)

- November 2023 (1)

- December 2023 (8)

- May 2024 (3)

- July 2024 (1)

- etc. with pagination menu at the bottom

Limited indeed. Perhaps the ordering can be reversed to have the most recent at the top. And one can (duplicate it and) customize it to a great extent. The years part of the good example above can be done. It took me a couple of hours just to get that. I wrote that up with specifics for future-folk as the answer to someone else's question. This was exactly what they wanted. But I have always dreamed of having the months too.
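To make the target concrete, here is a rough Python sketch of the year-then-month grouping I was after. It is not the module's code (that is PHP), just the shape of the data. The function names `build_archive_tree` and `render` and the sample dates are made up for illustration; the sample counts mirror the stock Archive listing above.

```python
import calendar
from collections import Counter, defaultdict
from datetime import date


def build_archive_tree(post_dates):
    """Group post dates into {year: {month: count}}, newest first."""
    counts = Counter((d.year, d.month) for d in post_dates)
    tree = defaultdict(dict)
    # Sorting the (year, month) pairs in reverse puts the newest first;
    # dicts keep insertion order, so the tree stays newest-first too.
    for (year, month), n in sorted(counts.items(), reverse=True):
        tree[year][month] = n
    return dict(tree)


def render(tree):
    """Render the tree as indented text: a year line, then its months."""
    lines = []
    for year, months in tree.items():
        lines.append(f"{year} ({sum(months.values())})")
        for month, n in months.items():
            lines.append(f"  {calendar.month_name[month]} ({n})")
    return "\n".join(lines)


# Hypothetical sample data: one date per post, days chosen arbitrarily.
sample = (
    [date(2023, 10, 1)] * 3
    + [date(2023, 11, 1)]
    + [date(2023, 12, 1)] * 8
    + [date(2024, 5, 1)] * 3
    + [date(2024, 7, 1)]
)

print(render(build_archive_tree(sample)))
```

Each year line carries the total for that year, and the months nest underneath, which is the part the stock listing cannot do.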

So after hours more trying to get those months to show up right, it seemed impossible to do with available Views functionality. I investigated using the Views Field View module but that seemed like a path to frustration. The idea there would be the years as one view, and under each year its own view of the months. Probably possible, but a terrible journey.

There are rumors that this can be done with Views Accordion. Also a path to hell IMHO. There is no way that would turn out in just the right simple way. I tried, briefly.

Finally I just decided to try a custom module. LLMs should at least give me a fighting chance.

I had some terrible adventures with not being able to get the prototype plugin to load on my production blog. There was confusion between Annotation and Attribute techniques for decorating the block class. Eventually I realized it wasn't ideal to have root:root ownership on all the files for the whole blog. After I changed that to www-data:www-data, there were mysteriously schema updates that Drupal wanted to apply to the database. Wow, I guess my site was really messed up by those permissions? At some point in here I did things the right way and set up a development environment on my desktop using DDEV. That's a pretty slick stack and a great experience.

So I got the module mostly developed using that dev instance. LLMs saved countless hours. It is such a relief to not have to continuously fight specific syntax details. I basically don't have to relearn PHP; knowing generic programming concepts gets me 98% of the way. Hopefully I know enough about Drupal and security that the module doesn't open me up to some epic hacking.

Anyways, I had the module working on my dev site, but then I STILL wasted literal hours trying to get this custom module to deploy on my production blog. The module would install but the block would not show up in the Place Block screen and the views would not show up in...Views. Finally I realized that I still had an early prototype of the module in web/modules/custom/archive_tree, and I was installing the nearly finished version using Composer (from my git repo), which was putting it in web/modules/contrib/archive_tree. Dangit!
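In hindsight, a quick check for duplicate copies would have saved me those hours. Here is a rough Python sketch of one, assuming each module directory holds a `<name>.info.yml` file (that is how Drupal identifies a module). The function name `find_duplicate_modules` is my own invention, not part of Drupal or Composer.

```python
from collections import defaultdict
from pathlib import Path


def find_duplicate_modules(modules_root):
    """Return {module_name: [directories]} for every module name that
    appears in more than one directory under modules_root."""
    seen = defaultdict(list)
    # A module's machine name is the part of the file name before ".info.yml".
    for info_file in Path(modules_root).rglob("*.info.yml"):
        name = info_file.name[: -len(".info.yml")]
        seen[name].append(info_file.parent)
    return {
        name: sorted(dirs) for name, dirs in seen.items() if len(dirs) > 1
    }
```

Calling `find_duplicate_modules("web/modules")` from the project root on my production box would have returned an entry for `archive_tree` listing both the custom and contrib directories.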

Anyways, the module does exactly what I wanted it to do.

https://git.axvig.com/aaron.axvig/drupal-module-archive-tree