Artificial Condition by Martha Wells

Number two in the Murderbot series. I like the short format.

I have been watching the TV show too and enjoying it--7 episodes into the first season.

Microsoft Access was a great (in some ways) tool that allowed people to create CRUD apps. Very useful for business, and some computer aficionados even dabbled for their personal use.

The use of Access faded over time for various reasons. Later versions tried to bridge the gap to web apps.

Now we have LLM tools that allow people with similar computer skills to create web apps of similar functionality/complexity. Let's call it CRUD. This goes beyond it being a CRUD app--it is just CRUD.

I imagine CRUD will be about as useful as Access was. Why spend $40/employee/month for some light-duty ERP/CRM type thing that you have to meld the business to? The dashing hero entrepreneur leader of the business can crank out something custom in three weekends! This is naive thinking in some ways but it happens. I have to respect the "keep it small/local" implication of it. Not every business needs to send 10% of profits and 100% of customer data off to a big city software shop that will eventually have a multi-million customer data breach.

Anyways--let's compare Access and CRUDs across a few different areas.

Access was easy--form building, database schema, and reporting tools all built into one program.

An LLM-generated CRUD is likely to be a web app with a lot of different technologies in play. HTML, CSS, JS, certs, ORMs, SQL, web servers, etc. LLMs really help with the speed of building, but one must still understand how it all works together. LLMs are also pretty good at teaching.

Security was an afterthought with typical Access use. The file itself could be password protected. No user roles.

A CRUD is unlikely to have robust access control. There will be temptations to roll one's own by doing fancy things like MD5 hash of passwords. But maybe it would use a pre-built authentication framework like the one available in Django. There are good options.
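To make the MD5 point concrete, here is a minimal sketch of what salted, deliberately slow password hashing looks like using only the Python standard library. The function names and the iteration count are illustrative assumptions, not a vetted policy, and a pre-built authentication framework is still the better choice over anything hand-rolled like this.

```python
import hashlib
import hmac
import os

# Assumption: an illustrative work factor; check current guidance before relying on it.
ITERATIONS = 600_000


def hash_password(password: str) -> tuple[bytes, bytes]:
    """Return (salt, derived_key) using a fresh random salt for each password."""
    salt = os.urandom(16)
    key = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, key


def verify_password(password: str, salt: bytes, expected_key: bytes) -> bool:
    """Re-derive the key from the stored salt and compare in constant time."""
    key = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return hmac.compare_digest(key, expected_key)
```

Unlike a bare MD5 digest, the random salt means two users with the same password get different stored values, and the iteration count makes brute-force guessing much slower.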

It was common to store the Access file on a network share. Then any latency would cause performance issues.

In the world of CRUD, one is almost certainly going to build a web app and it will handle latency just fine.

Access would often crash due to network performance issues, and occasionally this would result in corruption. Hopefully you had a backup. I think multi-user access sometimes caused disasters too.

CRUD is likely to be built using a database, and probably an ORM. This type of boilerplate code is what LLMs excel at. SQLite or PostgreSQL/MySQL are going to be the backend in most cases. Data is not likely to get corrupted due to computer or network issues. BUT bad code could really mess up a database. Backups are still your friend.
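For the SQLite case, a backup can be as small as the sketch below. It uses the online backup API in Python's built-in sqlite3 module; the function name and file paths are hypothetical, and PostgreSQL/MySQL would need their own dump tools instead.

```python
import sqlite3


def backup_sqlite(source_path: str, dest_path: str) -> None:
    """Copy a SQLite database to dest_path using the online backup API."""
    source = sqlite3.connect(source_path)
    dest = sqlite3.connect(dest_path)
    try:
        # Takes a consistent snapshot even if the app has the file open.
        source.backup(dest)
    finally:
        dest.close()
        source.close()
```

Something like `backup_sqlite("app.db", "app-backup.db")` run from a nightly scheduled job would cover the "bad code messed up the database" scenario, as long as older copies are kept around too.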

If the Access wizard disappears one day, it is not likely that a small business has someone who can truly move the Access system along.

I think the same is true for a CRUD. Someone has to have a basic understanding of devops to properly maintain and deploy the app.

There are rumors of Access databases that have been migrated into Microsoft SQL Server and web front ends created. And I think later versions of Access facilitated that. It is probably easier to start over and import data.

Odds are good that a CRUD is already a web app with a proper database back end and can be taken over by a moderately experienced devops person once the business grows to a certain size.

I don't think Access had much to facilitate interaction with outside systems. In a CRUD you are going to have many options available.

I think CRUD will fill a similar role as Access used to. But it will not reach the popularity of Access because of the proliferation of SaaS competitors (themselves built on LLMs). Gains and risks to the business are about the same. It is a powerful tool and easy to wield improperly.

It was nice to get my shiny new theme mostly figured out last week. The blog is more mine than it has ever been.

The thing that I wanted next was a way to browse posts from the archive, by year and month. I recall trying this a few years back, finding it difficult, and giving up. I tried again for a few hours over a few days recently, and it is just baffling. Some people claim it can be done with a View, and it seems possible, but I have tried almost everything and it is not even close.

Examples from the Internet (people want this!):

The goal is this, with years being able to expand/contract:

I believe this is an easy thing to do in WordPress, as I have seen it occasionally around the Web. Possibly stock. It is impossible to find if you are looking for an example though! There is an obsolete Drupal module WP Blog which claims to have duplicated this view. In Drupal, the stock Archive module is a thing, but is kind of limited. It looks like this:

Limited indeed. Perhaps the ordering can be reversed to have most recent at the top. And one can (duplicate it and) customize it to some great extent. The years part of the good example above can be done. It took me a couple hours just to get that. I wrote that up with specifics for future-folk as the answer to someone else's question. This was exactly what they wanted. But I have always dreamed of having the months too.

So after hours more trying to get those months to show up right, it seemed impossible to do with available Views functionality. I investigated using the Views field view module but that seemed like a path to frustration. The years as one view, and under each year its own view of the months. Probably possible, but a terrible journey.

There are rumors that this can be done with Views accordion. Also a path to hell IMHO. There is no way that would turn out in just the right simple way. I tried, briefly.

Finally I just decided to try a custom module. LLMs should at least give me a fighting chance.

I had some terrible adventures with not being able to get the prototype plugin to load on my production blog. There was confusion between Annotation and Attribute techniques for decorating the block class. Eventually I realized it wasn't ideal to have root:root ownership on all the files for the whole blog. After I changed that to www-data:www-data then mysteriously there were schema updates that Drupal wanted to apply to the database. Wow I guess my site was really messed up by those permissions? At some point in here I did things the right way and set up a development environment on my desktop using ddev. That's a pretty slick stack--great experience.

So I got the module mostly developed using that dev instance. LLMs saved countless hours of time. It is such a relief to not have to continuously fight specific syntax details. I basically don't have to relearn PHP; knowing generic programming concepts gets me 98% of the way. Hopefully I know enough about Drupal and security that the module doesn't open me up to some epic hacking.

Anyways I had the module working on my dev site, but then I STILL wasted literal hours trying to get this custom module to deploy on my production blog. The module would install but the block would not show up in the Place Block screen and the views would not show up in...Views. Finally I realized that I still had an early prototype of the module in web/modules/custom/archive_tree, and I was installing the nearly finished version using composer (from my git repo) which was putting it in web/modules/contrib/archive_tree. Dangit!
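A quick check for this kind of duplicate could look like the sketch below. It assumes the usual layout of one level of group directories (custom, contrib) under web/modules, each holding one directory per module; the function name is made up for illustration.

```python
from collections import defaultdict
from pathlib import Path


def find_duplicate_modules(modules_root: str) -> dict[str, list[str]]:
    """Map module name -> paths, for names found under more than one group directory."""
    root = Path(modules_root)
    if not root.is_dir():
        return {}
    seen = defaultdict(list)
    # Group directories are e.g. custom/ and contrib/.
    for group in sorted(p for p in root.iterdir() if p.is_dir()):
        for module in sorted(p for p in group.iterdir() if p.is_dir()):
            seen[module.name].append(str(module))
    return {name: paths for name, paths in seen.items() if len(paths) > 1}
```

Calling `find_duplicate_modules("web/modules")` on a site with both copies of archive_tree would list the custom and contrib paths side by side.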

Anyways, the module does exactly what I wanted it to do.

https://git.axvig.com/aaron.axvig/drupal-module-archive-tree
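The core idea behind a year/month archive is just grouping post dates into a two-level tree. Here is a rough sketch of that grouping in Python; it is not the module's code (which is PHP and lives in the repo above), and the function name and sample dates are invented for illustration.

```python
from collections import defaultdict
from datetime import date


def build_archive_tree(post_dates):
    """Return {year: {month: post_count}} with newest years and months first."""
    counts = defaultdict(lambda: defaultdict(int))
    for d in post_dates:
        counts[d.year][d.month] += 1
    return {
        year: {month: counts[year][month] for month in sorted(counts[year], reverse=True)}
        for year in sorted(counts, reverse=True)
    }


# Hypothetical post dates, only to show the shape of the result.
tree = build_archive_tree([date(2024, 1, 5), date(2025, 3, 1), date(2025, 3, 9)])
for year, months in tree.items():
    print(year, months)
```

Each year becomes an expandable row and each month under it links to that month's posts, with the count available for display.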

I wanted to see what my mostly stock Drupal server was capable of. For this first test I am starting with a simple homepage load. I used the ab tool (Apache Bench). It couldn't be simpler! But it only downloads the HTML, not the rest of the assets. Here is one page load:

user@hostname:~/$ ab -n 1 -c 1 https://aaron.axvigs.com/
This is ApacheBench, Version 2.3 <$Revision: 1923142 $>
Copyright 1996 Adam Twiss, Zeus Technology Ltd, http://www.zeustech.net/
Licensed to The Apache Software Foundation, http://www.apache.org/

Benchmarking aaron.axvigs.com (be patient).....done

Server Software:        nginx/1.22.1
Server Hostname:        aaron.axvigs.com
Server Port:            443
SSL/TLS Protocol:       TLSv1.3,TLS_AES_256_GCM_SHA384,256,256
Server Temp Key:        X25519 253 bits
TLS Server Name:        aaron.axvigs.com

Document Path:          /
Document Length:        44878 bytes

Concurrency Level:      1
Time taken for tests:   0.092 seconds
Complete requests:      1
Failed requests:        0
Total transferred:      45304 bytes
HTML transferred:       44878 bytes
Requests per second:    10.87 [#/sec] (mean)
Time per request:       92.023 [ms] (mean)
Time per request:       92.023 [ms] (mean, across all concurrent requests)
Transfer rate:          480.77 [Kbytes/sec] received

Connection Times (ms)
              min  mean[+/-sd] median   max
Connect:        3    3    0.0      3       3
Processing:    89   89    0.0     89      89
Waiting:       86   86    0.0     86      86
Total:         92   92    0.0     92      92

45KB of data transferred. This doesn't really match what Firefox shows in F12 (18KB transferred, 62KB size), so I am confused. Anyways, now let's run it 5000 times.

user@hostname:~/$ ab -n 5000 -c 50 https://aaron.axvigs.com/
This is ApacheBench, Version 2.3 <$Revision: 1923142 $>
Copyright 1996 Adam Twiss, Zeus Technology Ltd, http://www.zeustech.net/
Licensed to The Apache Software Foundation, http://www.apache.org/

Benchmarking aaron.axvigs.com (be patient)
Completed 500 requests
Completed 1000 requests
<snip>
Completed 5000 requests
Finished 5000 requests

Server Software:        nginx/1.22.1
Server Hostname:        aaron.axvigs.com
Server Port:            443
SSL/TLS Protocol:       TLSv1.3,TLS_AES_256_GCM_SHA384,256,256
Server Temp Key:        X25519 253 bits
TLS Server Name:        aaron.axvigs.com

Document Path:          /
Document Length:        44878 bytes

Concurrency Level:      50
Time taken for tests:   189.289 seconds
Complete requests:      5000
Failed requests:        3
   (Connect: 0, Receive: 0, Length: 3, Exceptions: 0)
Non-2xx responses:      3
Total transferred:      226385060 bytes
HTML transferred:       224255867 bytes
Requests per second:    26.41 [#/sec] (mean)
Time per request:       1892.886 [ms] (mean)
Time per request:       37.858 [ms] (mean, across all concurrent requests)
Transfer rate:          1167.95 [Kbytes/sec] received

Connection Times (ms)
              min  mean[+/-sd] median   max
Connect:        2  270 1141.2      3   35655
Processing:    85 1491 2484.0   1450   60003
Waiting:       83 1488 2484.0   1448   60003
Total:         88 1761 2747.3   1473   63081

Percentage of the requests served within a certain time (ms)
  50%   1473
  66%   1519
  75%   1563
  80%   1614
  90%   2281
  95%   3852
  98%   7524
  99%  15358
 100%  63081 (longest request)
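The summary figures in that report all derive from the request count, the concurrency level, and the total time. A small sketch of the arithmetic, with the inputs copied from the 5000-request run (the variable names are mine, not ab's):

```python
# Inputs copied from the 5000-request ab report.
total_requests = 5000
concurrency = 50
total_seconds = 189.289
total_bytes = 226_385_060

# Throughput of the whole test.
requests_per_second = total_requests / total_seconds

# "Time per request (mean, across all concurrent requests)": wall time per completed request.
ms_per_request_overall = total_seconds / total_requests * 1000

# "Time per request (mean)": how long each of the 50 clients waited on average.
ms_per_request_mean = ms_per_request_overall * concurrency

# "Transfer rate" in Kbytes/sec, using 1024 bytes per Kbyte.
kbytes_per_second = total_bytes / 1024 / total_seconds

print(f"{requests_per_second:.2f} requests/sec")
print(f"{ms_per_request_overall:.3f} ms per request across all clients")
print(f"{ms_per_request_mean:.1f} ms per request as seen by one client")
print(f"{kbytes_per_second:.1f} Kbytes/sec")
```

The results should land very close to ab's own numbers; small differences in the last digit come from ab using a more precise elapsed time than the rounded 189.289 seconds it prints.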

Three VMs are involved in servicing the requests to my desktop PC.

Yeah the physical hosts are nothing amazing. They aren't heavily loaded by other VMs at least. 5-10% average CPU usage.

It appears to be limited by the database server. This normally sits at 5-10% CPU usage, but runs at 100% during the test. About 26 requests per second seems alright.

Testing with some software that actually loads all the page resources is on my to-do list.

An enjoyable book with some interesting action. It is lacking in grand sci-fi concepts other than the sulfur stuff, which I thought for sure was going to be a cargo ship loaded with iron filings based on some commentary I had seen before reading. But there is a good amount of near-future envisionings: drone concepts, live streaming, climate impacts, etc.

The climate topics overall are a no for me. I don't identify with the people who dread climate impacts all day long in real life. So even though I think it is plausible for such things to happen, I guess I really do not care to read a book about it. I think I envision all these "dreaders" also reading the book and being like, "yes, see that is what will happen!" and want to roll my eyes so hard.

I wonder what topics I enjoy reading that would cause such eye rolling by others?

Lastly, I am really not sure what to think of the non-lethal border wars! Is there some kernel of this developing already that I don't know about?

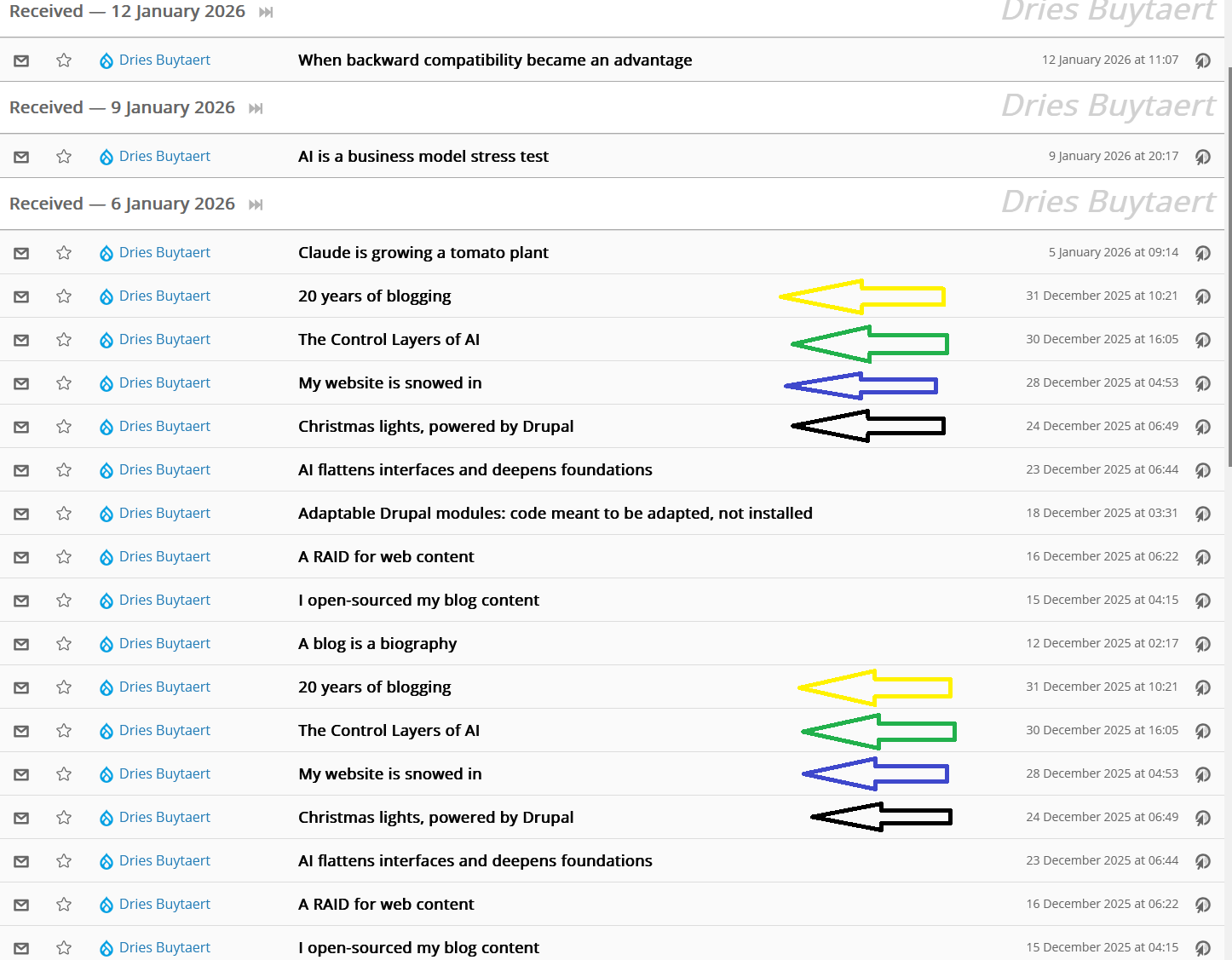

Since 2019 I have been running TT-RSS. I started with it on a small Digital Ocean node as I was living on a sailboat at the time. Once I had a house and ISP in 2021 it became one of ~10 VMs that I ran on an old laptop via Debian KVM/QEMU, and a couple years later, when I started ramping up my Proxmox cluster, things moved over there.

Also of note: I run a separate PostgreSQL VM with a bunch of databases on it and the TT-RSS database is one of those. And I just run the PHP code straight in the VM, no extra Docker layer.

So a few months ago the notorious TT-RSS developer called it quits. I do believe that it will continue to be maintained, but I was pretty sure there were better options. The TT-RSS code is spaghetti. I am somewhat accepting of this because it is similar to the kind of code I tend to write. I am just an OK programmer. So the TT-RSS code is like the kind where you can easily find the lines that do real work, because there are 5,000 lines of it in one file. I have observed that "high-quality" code gets so abstracted out that it nearly all looks like boilerplate. Overall I don't mind the quality of the codebase but it is a signal.

Another issue was the Android app. I have been changing phones about once a year and there is a TT-RSS app somewhere (F-Droid?) that seems legit but doesn't work. This always bogs me down for a bit. Now the app is just discontinued? Probably I could figure it out but it shouldn't be that hard.

I didn't do a super thorough search. I wanted something self-hosted, database-backed, very OSS, etc. I found FreshRSS and the demo site seemed OK. There are lots of knobs to twiddle. There are extensions. The GitHub page looks lively. Someone wrote a script to port all feeds and articles from TT-RSS to FreshRSS. Might as well!

(I like to think of my feed reader as an archive of sorts. Maybe 20 of the feeds I have followed over the years have now gone offline entirely. It's kind of a hoarding thing, and kind of a nostalgia thing. I like it. So I wanted to keep all those articles.)

Back to that script. Here it is. It worked pretty well and I wrote up my experience in an issue (an abuse of the issue feature I'm sure, sorry!).

I used this script to migrate 125,000 articles from 436 feeds. A batch size of 60000 yielded 3 files of 456MB, 531MB, and 62MB. This ran in under 1 minute and pretty much maxed out the 4GB of RAM on my TT-RSS VM.

So with these file sizes I had to bump all the web server file size limits to 1000MB, PHP memory limit to 1200MB, and various timeout values to 300 seconds. This was fine for the first file, but the second one maxed out that memory limit so I reran with a 3000MB limit. Also by this point I was worried about the 300 seconds being enough so I bumped that to 3000 seconds. And that file ran fine then too. It shouldn't be hard for most people to get better import performance as my database VM storage is slow.

I imported into FreshRSS version 1.28.0. I see that your first patch has been merged, but the second one about the categories still has not. I opted not to figure out how to get the category import to work and will manually categorize them. Yes, all 436 feeds. First of all I will take the opportunity to categorize better than before. Also, I had nested categories in TT-RSS and I'm not sure how that was going to translate to FreshRSS anyways.

Thank you for the script!
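The three export files follow directly from the batch size. A quick sketch of the arithmetic (the function name is made up for illustration and is not taken from the migration script):

```python
def batch_sizes(total_items: int, batch_size: int) -> list[int]:
    """Return the number of items in each batch when splitting total_items."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    full_batches, remainder = divmod(total_items, batch_size)
    return [batch_size] * full_batches + ([remainder] if remainder else [])


# 125,000 articles at 60,000 per batch: two full files and one small one.
print(batch_sizes(125_000, 60_000))
```

That lines up with two large files and a much smaller third one. A smaller batch size would produce more files that each fit under lower upload and memory limits, at the cost of more imports to run.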

Overall it went pretty smooth and I have been using it for about a week. I am happy with it! I have most of my feeds categorized and the dead ones "muted" (should be called "disabled" or something).

I did notice an issue with duplicate articles affecting a lot but not all feeds. Say a feed on a website gives the 10 latest articles. TT-RSS had pulled all 10 of those and had them in the database, along with say 90 past articles. So those 100 articles were in the TT-RSS database and were copied to FreshRSS. Then FreshRSS pulled the feed from the website and didn't recognize that those 10 articles already existed in the database, and stuffed them in again. So now there are 10 articles listed twice.

This was a little upsetting but after two days I don't really notice it anymore. I'm guessing that TT-RSS did something dumb with article GUIDs but could be wrong.
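If the GUIDs really did differ between the two systems (still a guess), matching on something else such as the article link is one way to spot the doubles. A minimal sketch, where the dictionary keys are hypothetical and do not reflect the actual FreshRSS schema:

```python
def dedupe_articles(articles):
    """Keep the first article seen for each (feed, normalized link) pair."""
    seen = set()
    unique = []
    for article in articles:
        # Ignore the GUID on purpose; normalize the link lightly instead.
        link = article["link"].strip().rstrip("/")
        key = (article["feed_id"], link)
        if key in seen:
            continue
        seen.add(key)
        unique.append(article)
    return unique
```

Keeping the first occurrence favors the migrated copy, assuming migrated articles are listed before freshly fetched ones.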

The FreshRSS UI is pretty good, definitely better than TT-RSS. So I have already set up two features that I just hadn't bothered with in TT-RSS. I have been disabling old feeds so that they don't show up as errored out. And I have set up a few feeds to automatically download full articles when they aren't included in the feeds. This is actually super simple, no extension needed.

I miss nested feed categories but don't really need them.

I would love to save article images to my personal S3-compatible archives but there doesn't seem to be an extension capable of doing this.

I haven't set up a mobile app yet. I have heard the website is good enough on mobile so will try that first.

I am not using any extensions yet.

I moved my Digikam database from the local SQLite file to a networked MariaDB server. This was mostly fine but Digikam (8.8.0 version) was performing terribly after any face tagging operation.

I would get frequent "Force Quit or Wait?" questions from the OS, and the UI would hang for 5-10 seconds. This was always matched with a face scan job kicking off and showing up in the jobs queue. Everything generally worked but was incredibly tedious. ~20,000 images in my collection, BTW.

A few times I went through the DB's config and optimized some things, which helped a little. Typical tuning things like increasing memory and threads, and bumping up max_allowed_packet as recommended. Still the issue persisted.

Eventually I learned that there is an "Enable background face recognition scan" setting. It seems obvious in retrospect, but turning it off completely fixed the issue. I will just need to periodically rescan for faces now to apply what training has been done since the last run.

I recently purchased a used SuperMicro SuperServer E300-9A-4C because I had come upon a bunch of 64GB ECC RDIMM sticks from the e-waste pile and supposedly it could handle four of them. This makes for a power efficient way to get 256GB of RAM in a homelab node, although it is a bit weak on CPU at 4 cores. I would have preferred a SYS-5019A-FTN4 with 8 cores but couldn't find used ones for sale.

The used server from eBay of course did not come with a power supply so I had to order one and wait a while. Finally that arrived so I stuffed in 4x Samsung M393A8G40AB2CWE sticks and booted up the Proxmox installer. After a very long wait for memory training or something, it would get to the first screen where I could choose graphical or terminal install. And then after choosing either one I would get the "Loading initial ramdisk" message and then it would freeze at a black screen.

Eventually I found some errors in the IPMI's Server Health Log.

Uncorrectable ECC @ DIMMB2 - Assertion

Sometimes it would be DIMMB1 too. I swapped out both B1 and B2 and still had the same issues. Then I ran the memtest86 program via the Proxmox installer's Advanced Options and sure enough it failed after less than a minute, right at the 130GB mark.

I swapped out for 4x 32GB SK Hynix HMA84GR7MFR4N-TF sticks and the memtest ran for a while. Next I tried the Proxmox installer and that ran as expected too.

So 128GB of RAM in the system is good enough for me that I don't want to sink hours more into figuring out why the larger ones aren't working. This has to be a very rare configuration for such a small server with low CPU power, so it wouldn't surprise me if there are hardware limitations. Or I am simply not looking carefully at module speeds, timings, etc.

This book seems to be THE resource on this era and locale of old farmhouse styles. There is a ton of great info about the various types and in fact this is the only material I know of which has grouped them into specific types.

The book could be improved with more example pictures.