Decent enough to get me to read the next one in the series. I would say more but I finished it about a month ago so the memories are fading. Also as usual I read it in 15 minute chunks as I was falling asleep.

Aaron's blog

Termination Shock by Neal Stephenson

An enjoyable book with some interesting action. It is lacking in grand sci-fi concepts other than the sulfur stuff, which I thought for sure was going to be a cargo ship loaded with iron filings based on some commentary I had seen before reading. But there is a good amount of near-future envisionings: drone concepts, live streaming, climate impacts, etc.

The climate topics overall are a no for me. I don't identify with the people who dread climate impacts all day long in real life. So even though I think it is plausible for such things to happen, I guess I really do not care to read a book about it. I think I envision all these "dreaders" also reading the book and being like, "yes, see that is what will happen!" and want to roll my eyes so hard.

I wonder what topics I enjoy reading that would cause such eye rolling by others?

Lastly, I am really not sure what to think of the non-lethal border wars! Is there some kernel of this developing already that I don't know about?

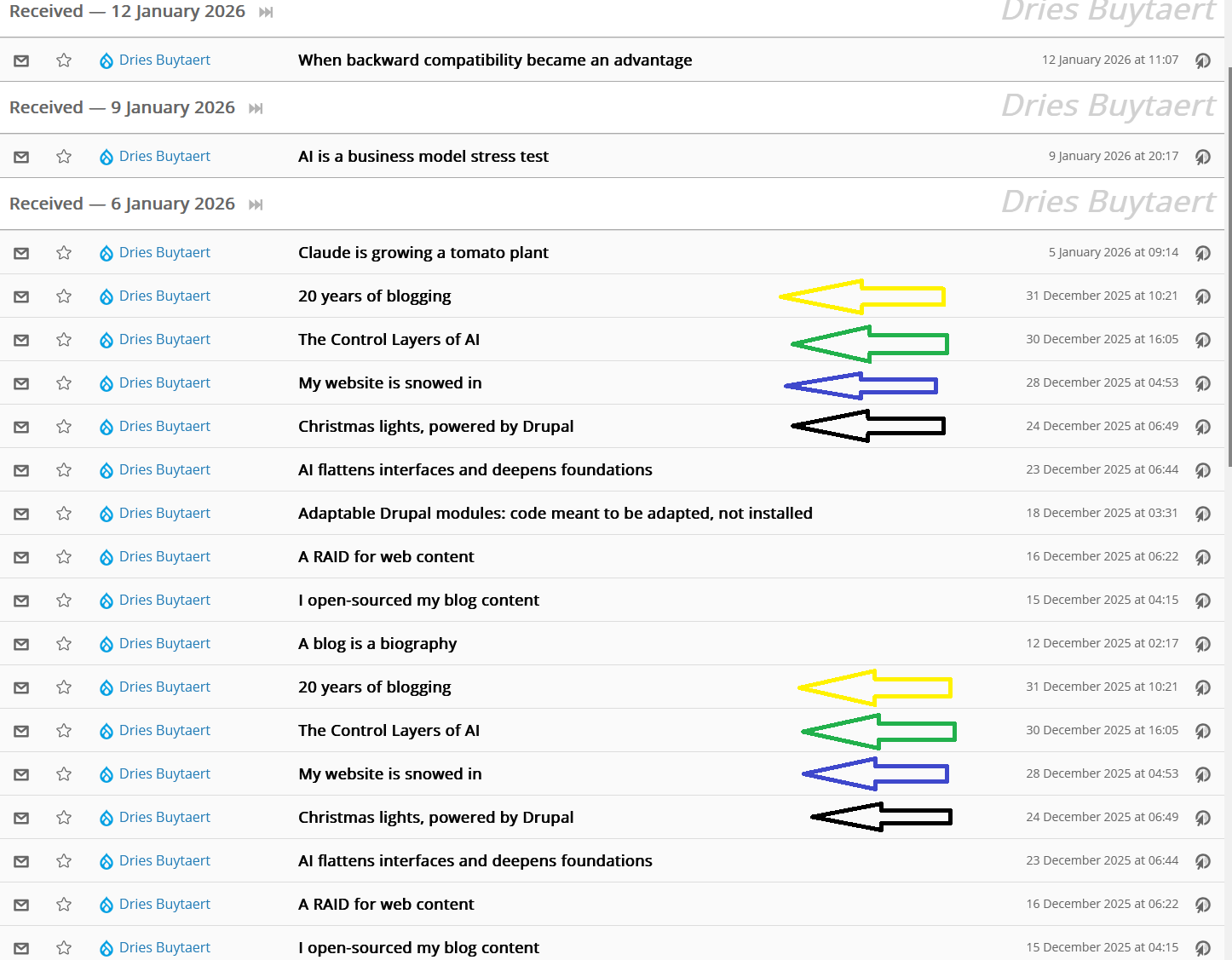

TT-RSS to FreshRSS

Since 2019 I have been running TT-RSS. I started with it on a small DigitalOcean node as I was living on a sailboat at the time. Once I had a house and ISP in 2021 it became one of ~10 VMs that I ran on an old laptop via Debian KVM/QEMU. A couple of years later, when I started ramping up my Proxmox cluster, it moved over there.

Also of note: I run a separate PostgreSQL VM with a bunch of databases on it and the TT-RSS database is one of those. And I just run the PHP code straight in the VM, no extra Docker layer.

TT-RSS turbulence

So a few months ago the notorious TT-RSS developer called it quits. I do believe that it will continue to be maintained, but I was pretty sure there were better options. The TT-RSS code is spaghetti. I am somewhat accepting of this because it is similar to the kind of code I tend to write. I am just an OK programmer. So the TT-RSS code is the kind where you can easily find the lines that do real work, because there are 5,000 lines of it in one file. I have observed that "high-quality" code gets so abstracted out that it nearly all looks like boilerplate. Overall I don't mind the quality of the codebase but it is a signal.

Another issue was the Android app. I have been changing phones about once a year and there is a TT-RSS app somewhere (F-Droid?) that seems legit but doesn't work. This always bogs me down for a bit. Now the app is just discontinued? Probably I could figure it out but it shouldn't be that hard.

Where to?

I didn't do a super thorough search. I wanted something self-hosted, database-backed, very OSS, etc. I found FreshRSS and the demo site seemed OK. There are lots of knobs to twiddle. There are extensions. The GitHub page looks lively. Someone wrote a script to port all feeds and articles from TT-RSS to FreshRSS. Might as well!

(I like to think of my feed reader as an archive of sorts. Maybe 20 of the feeds I have followed over the years have now gone offline entirely. It's kind of a hoarding thing, and kind of a nostalgia thing. I like it. So I wanted to keep all those articles.)

Migration script

Back to that script. Here it is. It worked pretty well and I wrote up my experience in an issue (an abuse of the issue feature I'm sure, sorry!).

I used this script to migrate 125,000 articles from 436 feeds. A batch size of 60000 yielded 3 files of 456MB, 531MB, and 62MB. This ran in under 1 minute and pretty much maxed out the 4GB of RAM on my TT-RSS VM.
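
As a rough sanity check on those numbers, here is a minimal sketch of the batch arithmetic. It assumes the script simply cuts the article list into fixed-size batches, which is not confirmed from the script itself, and the function names are made up for illustration. The 125,000 figure is also rounded, so the last batch size is only approximate.

```python
import math

def batch_count(total_articles: int, batch_size: int) -> int:
    """Number of export files when articles are written in fixed-size batches."""
    return math.ceil(total_articles / batch_size)

def batch_sizes(total_articles: int, batch_size: int) -> list:
    """Article count per export file; only the last file may be partial."""
    full, rest = divmod(total_articles, batch_size)
    return [batch_size] * full + ([rest] if rest else [])

# Hypothetical helper names; the inputs are the rounded figures from the post.
print(batch_count(125_000, 60_000))   # 3
print(batch_sizes(125_000, 60_000))   # [60000, 60000, 5000]
```

Two full batches plus a small remainder lines up with two large files and one much smaller one.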

So with these file sizes I had to bump all the web server file size limits to 1000MB, PHP memory limit to 1200MB, and various timeout values to 300 seconds. This was fine for the first file, but the second one maxed out that memory limit so I reran with a 3000MB limit. Also by this point I was worried about the 300 seconds being enough so I bumped that to 3000 seconds. And that file ran fine then too. It shouldn't be hard for most people to get better import performance as my database VM storage is slow.

I imported into FreshRSS version 1.28.0. I see that your first patch has been merged, but the second one about the categories still has not. I opted not to figure out how to get the category import to work and will manually categorize them. Yes, all 436 feeds. First of all I will take the opportunity to categorize better than before. Also, I had nested categories in TT-RSS and I'm not sure how that was going to translate to FreshRSS anyways.

Thank you for the script!

Overall it went pretty smoothly and I have been using FreshRSS for about a week. I am happy with it! I have most of my feeds categorized and the dead ones "muted" (should be called "disabled" or something).

Duplicate articles

I did notice an issue with duplicate articles affecting many, but not all, feeds. Say a feed on a website gives the 10 latest articles. TT-RSS had pulled all 10 of those and had them in the database, along with say 90 past articles. So those 100 articles were in the TT-RSS database and were copied to FreshRSS. Then FreshRSS pulled the feed from the website and didn't recognize that those 10 articles already existed in the database, and stuffed them in again. So now there are 10 articles listed twice.

This was a little upsetting but after two days I don't really notice it anymore. I'm guessing that TT-RSS did something dumb with article GUIDs but could be wrong.
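
The GUID guess can be illustrated with a small sketch. This is not TT-RSS or FreshRSS code. It assumes a reader that deduplicates purely by GUID, and it assumes (hypothetically) that the imported articles carry GUIDs that were rewritten with a prefix, so they no longer match what the feed serves.

```python
def merge_fetched(stored: dict, fetched: list) -> int:
    """Add fetched articles to `stored`, keyed by GUID. Returns how many were added."""
    added = 0
    for article in fetched:
        if article["guid"] not in stored:
            stored[article["guid"]] = article
            added += 1
    return added

# 100 imported articles whose GUIDs were altered by the old reader
# (the "old-reader:" prefix is an assumption made up for this example).
imported = {
    f"old-reader:{n}": {"guid": f"old-reader:{n}", "title": f"Post {n}"}
    for n in range(100)
}

# The website's feed offers the latest 10 articles with their original GUIDs.
fetched = [{"guid": str(n), "title": f"Post {n}"} for n in range(90, 100)]

added = merge_fetched(imported, fetched)
titles = [a["title"] for a in imported.values()]
duplicates = len(titles) - len(set(titles))
print(added, duplicates)  # 10 10
```

If the stored GUIDs matched the feed's GUIDs, `merge_fetched` would add nothing and there would be no duplicates.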

Good UI

The FreshRSS UI is pretty good, definitely better than TT-RSS. So I have already set up two features that I just hadn't bothered with in TT-RSS. I have been disabling old feeds so that they don't show up as errored out. And I have set up a few feeds to automatically download full articles when they aren't included in the feeds. This is actually super simple, no extension needed.

Other notes

I miss nested feed categories but don't really need them.

I would love to save article images to my personal S3-compatible archives but there doesn't seem to be an extension capable of doing this.

I haven't set up a mobile app yet. I have heard the website is good enough on mobile so will try that first.

I am not using any extensions yet.

Digikam face tagging performance win

I moved my Digikam database from the local SQLite file to a networked MariaDB server. This was mostly fine but Digikam (version 8.8.0) was performing terribly after any face tagging operation.

I would get frequent "Force Quit or Wait?" prompts from the OS, and the UI would hang for 5-10 seconds. This was always matched with a face scan job kicking off and showing up in the jobs queue. Everything generally worked but was incredibly tedious. ~20,000 images in my collection, BTW.

A few times I went through the DB's config and optimized some things, which helped a little. Typical tuning things like increasing memory and threads, and bumping up max_allowed_packet as recommended. Still the issue persisted.

Eventually I learned that there is an "Enable background face recognition scan" setting. It seems obvious in retrospect, but turning it off completely fixed the issue. I will just need to periodically rescan for faces now to apply what training has been done since the last run.

Big RAM in a little server

I recently purchased a used SuperMicro SuperServer E300-9A-4C because I had come upon a bunch of 64GB ECC RDIMM sticks from the e-waste pile and supposedly it could handle four of them. This makes for a power efficient way to get 256GB of RAM in a homelab node, although it is a bit weak on CPU at 4 cores. I would have preferred a SYS-5019A-FTN4 with 8 cores but couldn't find used ones for sale.

The used server from eBay of course did not come with a power supply so I had to order one and wait a while. Finally that arrived so I stuffed in 4x Samsung M393A8G40AB2CWE sticks and booted up the Proxmox installer. After a very long wait for memory training or something, it would get to the first screen where I could choose graphical or terminal install. And then after choosing either one I would get the "Loading initial ramdisk" message and then it would freeze at a black screen.

Eventually I found some errors in the IPMI's Server Health Log.

Uncorrectable ECC @ DIMMB2 - Assertion

Sometimes it would be DIMMB1 too. I swapped out both B1 and B2 and still had the same issues. Then I ran the memtest86 program via the Proxmox installer's Advanced Options and sure enough it failed after less than a minute, right at the 130GB mark.

I swapped in 4x 32GB SK Hynix HMA84GR7MFR4N-TF sticks and the memtest ran for a while without failing. Next I tried the Proxmox installer and that ran as expected too.

So 128GB of RAM in the system is good enough for me that I don't want to sink hours more into figuring out why the 64GB sticks aren't working. This has to be a very rare configuration for such a small server with low CPU power, so it wouldn't surprise me if there are hardware limitations. Or I am simply not looking carefully at module speeds, timings, etc.

Homes in the Heartland: Balloon Frame Farmhouses of the Upper Midwest

This book seems to be THE resource on this era and locale of old farmhouse styles. There is a ton of great info about the various types and in fact this is the only material I know of which has grouped them into specific types.

The book could be improved with more example pictures.

The Rise and Fall of D.O.D.O. by Neal Stephenson and Nicole Galland

The premise of magic dying out for the given reasons is pretty clever, and the time travel mechanism and its effects hold up to some examination. I find the historical setting to be fun and pretty well detailed in this book.

The "found documents" format is tedious, and especially so for my eBook situation due to some formatting difficulties. I think quality dropped a bit towards the end as some plot points became less plausible.

Hatch Rest+ 2nd gen teardown

Our Hatch Rest+ 2nd gen lost all battery functionality. If lifted off of the wireless charging base for a fraction of a second it would die. It was replaced under warranty, and customer support even saw the second one on the same account and said it would eventually develop the same issue. So they replaced that one too! Great customer service, which is really what is necessary to make the best out of what I'm sure is a bad situation for them.

Most exciting for me about the whole situation was the opportunity to tear apart the old one!

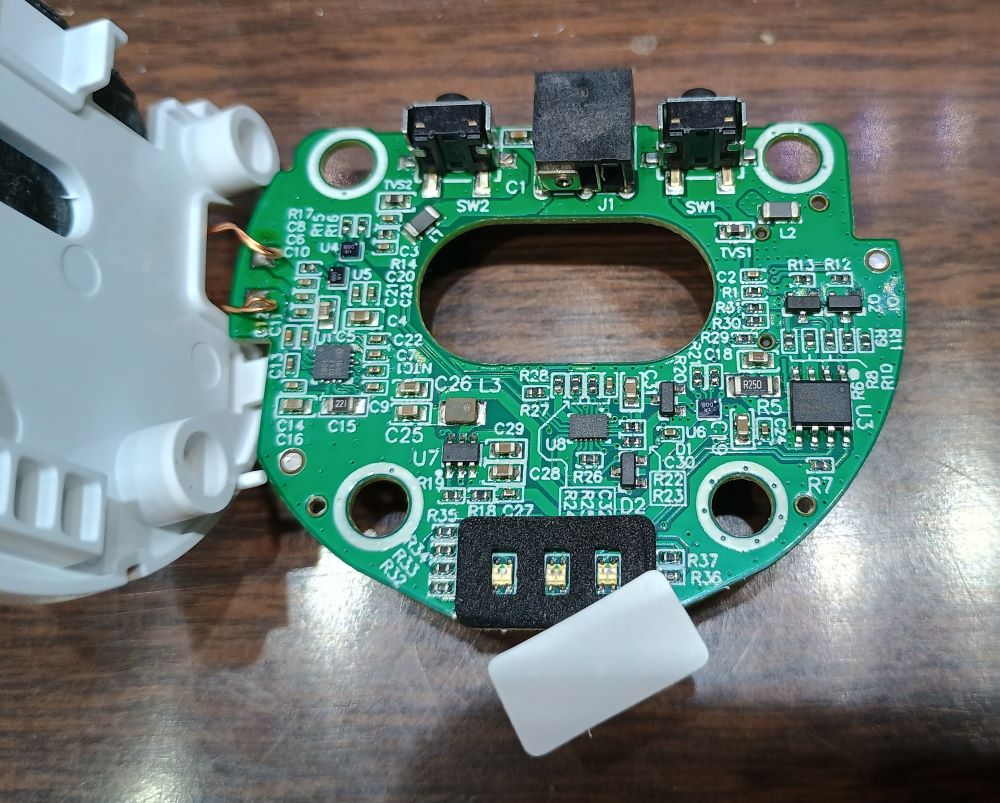

Tearing off the rubber pad on the bottom reveals some screws, and then the wireless charging module comes out. Also found here are the barrel jack, and the power and sync buttons.

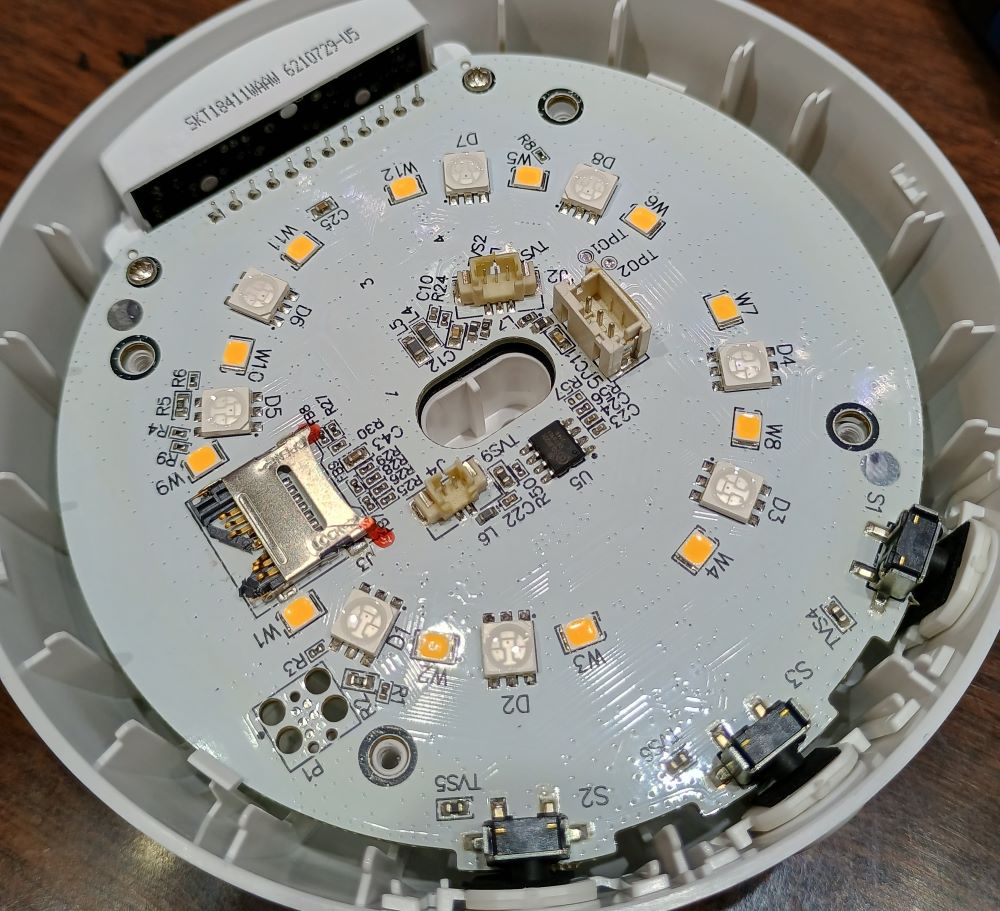

Here is the component side of the wireless charging board:

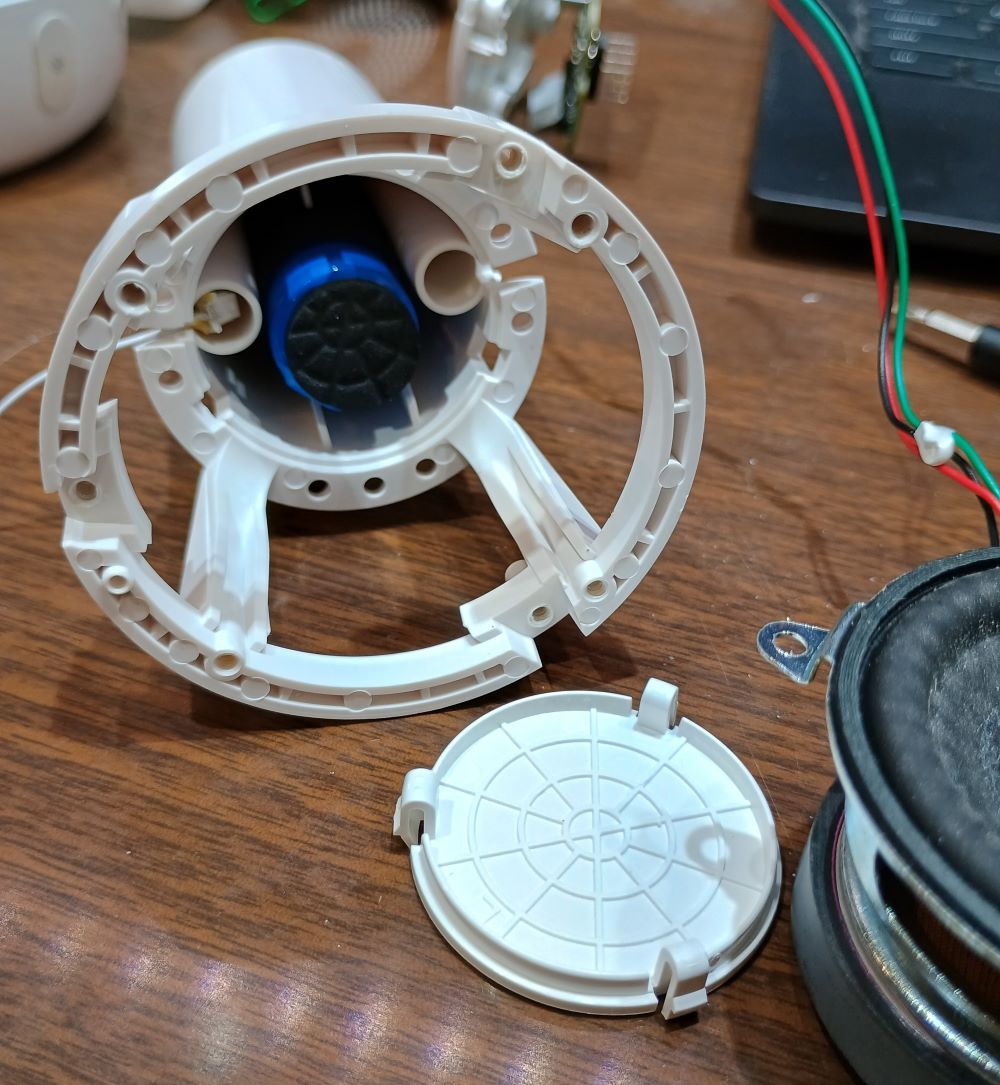

With the removal of the two middle screws shown in the first picture, the top of the cone comes off. There are some tight wires. The battery and speaker ones each have three wires and seemed to pop off easily. The single wire is for the metal touch ring and I think I stretched that one to a damaging extent. I didn't plan to put it back together though.

Access through the top is by prying off the speaker grill:

The speaker is held on by a few screws:

And within there is the battery:

The battery is a single 18650 cell:

It has a BMS. The voltage directly on the battery was effectively 0V.

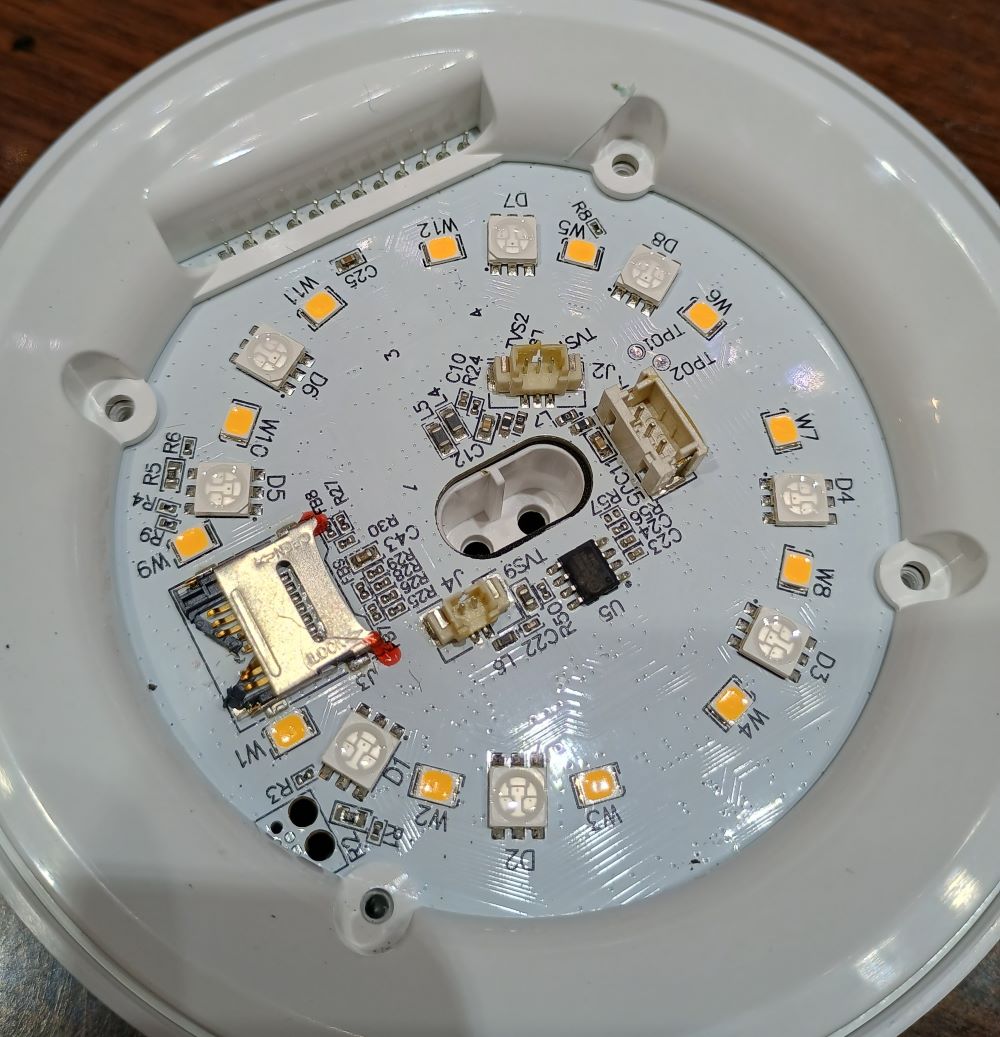

Now the main board. This picture is after I had pried the SD Card out. You can see that I damaged the card holder, but the card itself was fine. Like many of the cable plugs within, it had a small dab of glue on it.

The SD card is 16GB and has a FAT32 filesystem. I found about 7GB of mostly audio files on there. Curiously, it seems to have all of the files for several Hatch products. Folders include:

- Rest_Sounds

- RestMini_Sounds

- Restore_Sounds

- Sleep_Sounds

Another folder is named Downloaded and contains two interesting files. BeRIOTGameMusicTTR_20220607.mp3 is an hour-long rendition of what I think is the Peaceful Flute music. And RIOT_BrownNoise_CGV3_20240115.wav is a short clip of white noise.
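
For anyone wanting to tally a card like this, here is a minimal sketch that sums file sizes per top-level folder. The function name and the mount point in the usage comment are assumptions for illustration, not something taken from the teardown.

```python
from pathlib import Path

def folder_sizes(root: str) -> dict:
    """Map each top-level folder under `root` to the total bytes of the files inside it."""
    sizes = {}
    for entry in Path(root).iterdir():
        if entry.is_dir():
            # rglob("*") walks nested subfolders too; only regular files are counted.
            sizes[entry.name] = sum(
                f.stat().st_size for f in entry.rglob("*") if f.is_file()
            )
    return sizes

# Usage, assuming the card is mounted at a hypothetical /mnt/hatch:
# for name, size in sorted(folder_sizes("/mnt/hatch").items()):
#     print(f"{name}: {size / 1e9:.2f} GB")
```

Files sitting directly in the card's root are ignored by this sketch; only folders are summarized.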

Removed the plastic ring:

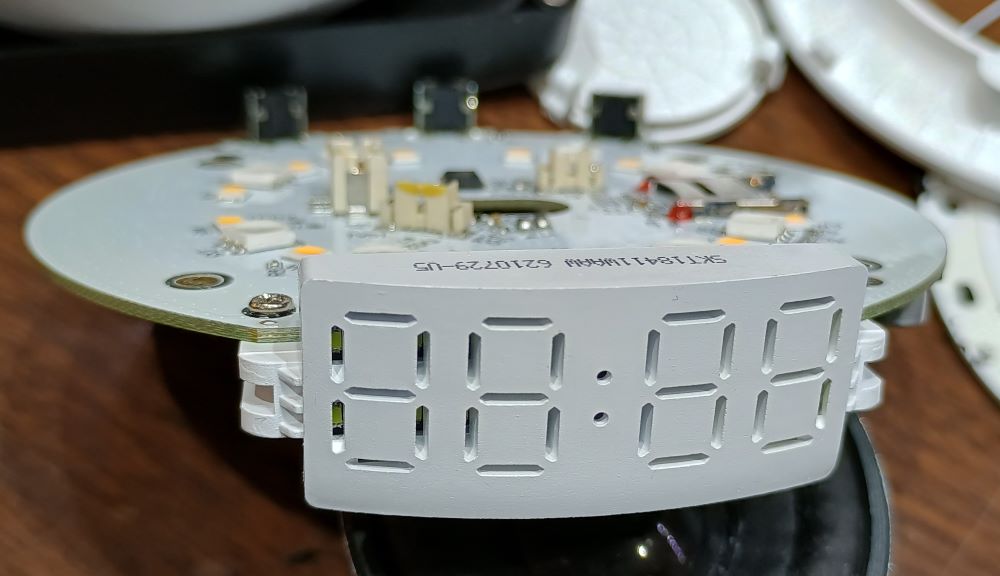

Here is the 7-segment display. It appears to be individual LEDs behind 7 slots.

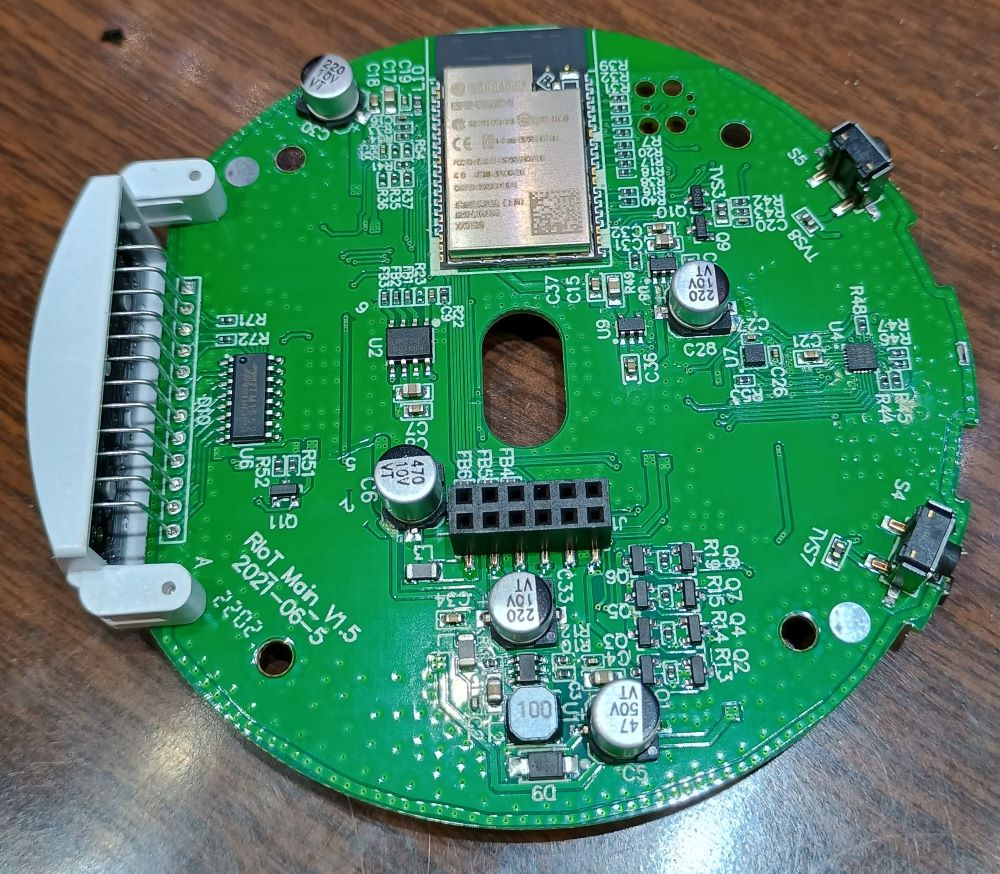

Here is the flip side of that main board:

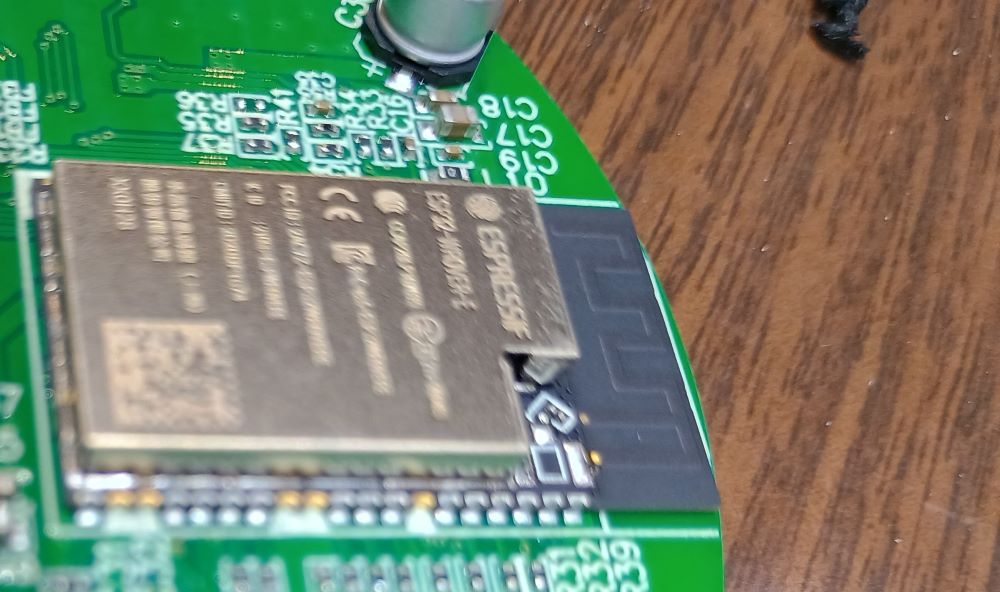

Espressif ESP32-WROVER-E processor:

git commit murder by Michael W Lucas

A mystery novel that takes place at a BSD conference--hilarious premise. OK execution.

Run Your Own Mail Server by Michael W Lucas

I set up my own mail server about a year before reading this book so the timing wasn't great, but still this was a good read. And fortunately I had chosen almost exactly the same software stack.

I will probably use this to go back through and fine-tune a few things on my server...eventually. The rspamd config comes to mind. I don't think it is updating any definitions as I mark new messages as Junk.

This book was great for putting me to sleep. But that's OK! It probably isn't great to read something that amps you up and keeps you up.